EDITORIALS

The utility of machine learning in the management of patients with peripheral arterial disease

Ravindhran B,¹ Thakker D²

Introduction

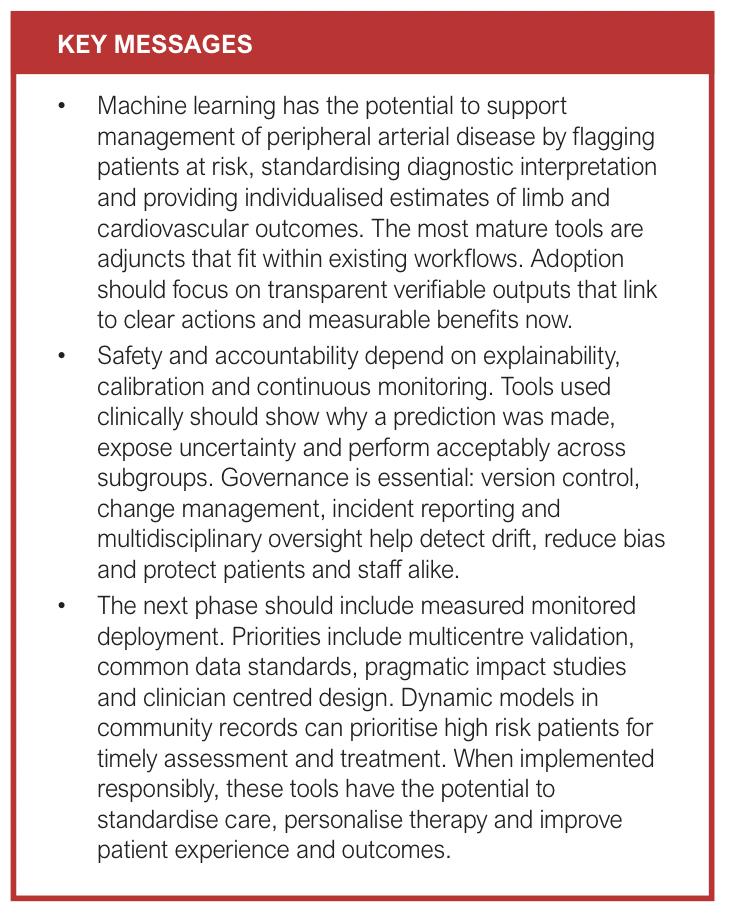

Machine learning (ML) is moving decisively from concept to clinic-adjacent evaluation in vascular medicine. Peripheral arterial disease (PAD) has become a major focus for ML because it is clinically heterogeneous and carries a high health and economic burden, making it well suited to data-driven approaches for earlier detection and personalised risk assessment. The core promise remains unchanged: algorithms that learn patterns across multimodal data (clinical notes, vital signs, blood results, ultrasound or duplex waveforms, CT and MR angiography, intravascular imaging, prescriptions and longitudinal outcomes) can help detect disease earlier, stratify risk more reliably and match treatment to patient and lesion characteristics. The crucial question for vascular surgeons is where that promise stands today. Across diagnostics, risk stratification and outcome prediction, artificial intelligence (AI) and ML (together, AI/ML) are best regarded as a maturing adjunct whose most promising tools are in either retrospective or quasi-prospective evaluation, with a small but growing body of external validation. The field is now constrained less by raw model accuracy than by generalisability, clinical integration, explainability and governance.

Diagnostics

Diagnostic applications have produced some of the most tangible early gains. Electronic health record phenotyping and natural language processing can potentially flag probable PAD from diagnostic codes, medication patterns, laboratory results and free-text notes, with performance sufficient to support targeted ankle–brachial pressure index (ABPI) or vascular laboratory testing in primary care and diabetes clinics.1–3 Real-world implementation work has started to define how such models can be integrated into care pathways; qualitative and mixed-methods studies emphasise workflow alignment, clinician trust and equity monitoring as prerequisites for sustained use.4,5 In the vascular laboratory, algorithms that classify continuous-wave Doppler and plethysmography waveforms are achieving increasingly high accuracy under laboratory conditions and can reduce inter-operator variability, although prospective evidence of their impact on diagnostic accuracy or throughput remains limited.6–9 In imaging, deep learning for CT angiographic (CTA) segmentation and stenosis quantification continues to advance quickly, with several groups reporting automated arterial tree segmentation, calcium scoring, runoff quantification and lesion severity classification, particularly in the femoro-popliteal and infrapopliteal beds.10–14 These systems show high agreement with expert readers on retrospective datasets, but their robustness across scanners and centres, and their prospective clinical utility, have yet to be established.

Intravascular imaging is benefiting from ML originally developed for the coronary circulation, notably plaque component detection and calcium quantification on intravascular ultrasound and optical coherence tomography. Early peripheral applications suggest that reproducible measurements to guide vessel preparation and device selection are feasible, but PAD-specific clinical validation and device-agnostic performance remain works in progress.15,16 Outside the imaging suite, photoplethysmography captured by smartphones and wearable devices has shown proof of concept for PAD screening, but large-scale prospective studies in target populations, with confirmatory testing and cost-effectiveness analyses, are still needed before routine clinical adoption can be recommended.8,17,18 Taken together, diagnostic AI for PAD is moving from promising retrospective accuracy to early-stage clinical evaluation. In the near term, adoption is best directed towards tasks whose outputs can be verified directly at the point of care, such as waveform classification, stenosis measurement and structured report extraction, while the implementation evidence base is built in parallel.

Risk stratification

Risk stratification for both major adverse cardiovascular events and major adverse limb events is central to PAD care, and this is an area where ML has achieved clinically meaningful performance on large registries and multi-institutional datasets. Recent studies using the Vascular Quality Initiative and other consortia demonstrate that ML can estimate 30-day and long-term risks of major adverse limb events, amputation, reintervention, poor wound healing and major adverse cardiovascular events with very good discrimination and better calibration than traditional scores.19 Multicentre registry analyses have trained and tested models to predict short- and long-term adverse outcomes after endovascular intervention, showing reasonable overall performance and calibration, but they also highlight the need for external validation and clinically actionable risk outputs. Similar models for bypass outcomes show promise, but generalisability remains challenging because of device- and conduit-specific heterogeneity, centre effects and missing data for key variables (e.g., runoff).20–25 At this stage, selective deployment of validated models to inform shared decision-making and surveillance planning is appropriate, provided that calibration is satisfactory, probabilities are communicated transparently and local performance has been verified. Given well-established disparities in PAD presentation and outcomes, performance should also be reported by subgroup and shown to be acceptable in each.
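Verifying local performance before deployment can be illustrated with a short sketch. The Python below is a minimal, assumption-laden example rather than a validated tool: it computes the concordance (C) statistic for discrimination and the observed-to-expected (O/E) ratio as a crude calibration-in-the-large check, both overall and within each subgroup. The function names, subgroup labels and toy risks and outcomes are hypothetical; a full local validation would also assess calibration slope and calibration curves, ideally with an established statistics package.

```python
from collections import defaultdict


def c_statistic(probs, outcomes):
    """Concordance (C) statistic: share of event/non-event pairs in which the
    patient with the event received the higher predicted risk (ties count 0.5)."""
    events = [p for p, y in zip(probs, outcomes) if y == 1]
    non_events = [p for p, y in zip(probs, outcomes) if y == 0]
    if not events or not non_events:
        return float("nan")  # undefined unless both outcome classes are present
    score = 0.0
    for pe in events:
        for pn in non_events:
            if pe > pn:
                score += 1.0
            elif pe == pn:
                score += 0.5
    return score / (len(events) * len(non_events))


def observed_expected_ratio(probs, outcomes):
    """Calibration-in-the-large: observed events / sum of predicted risks.
    Values near 1 suggest the model's average risk level transfers locally."""
    expected = sum(probs)
    return sum(outcomes) / expected if expected > 0 else float("nan")


def local_report(probs, outcomes, groups):
    """Return {label: (C statistic, O/E ratio)} overall and for each subgroup."""
    by_group = defaultdict(lambda: ([], []))
    for p, y, g in zip(probs, outcomes, groups):
        by_group[g][0].append(p)
        by_group[g][1].append(y)
    report = {"overall": (c_statistic(probs, outcomes),
                          observed_expected_ratio(probs, outcomes))}
    for g, (gp, gy) in by_group.items():
        report[g] = (c_statistic(gp, gy), observed_expected_ratio(gp, gy))
    return report


# Toy inputs for illustration only (not study data); subgroup labels are hypothetical.
probs = [0.10, 0.20, 0.30, 0.60, 0.70, 0.80]
outcomes = [0, 0, 1, 0, 1, 1]
groups = ["diabetes", "no_diabetes", "diabetes", "no_diabetes", "diabetes", "no_diabetes"]
report = local_report(probs, outcomes, groups)
for label, (c, oe) in report.items():
    print(f"{label}: C = {c:.2f}, O/E = {oe:.2f}")
```

In this toy example discrimination looks perfect within each subgroup while the O/E ratio differs markedly between them, which is the kind of pattern that reporting overall discrimination alone would hide.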

Early and delayed outcome prediction

Beyond risk stratification, AI/ML is increasingly used for granular outcome prediction tied to procedural planning and follow-up. Peri-procedural complications such as contrast-associated kidney injury, bleeding and access site problems have been modelled with encouraging retrospective performance.26 Looking further ahead, lesion-specific models that incorporate CTA features, duplex metrics and lesion morphology are being developed to predict primary patency and target lesion revascularisation; several reports demonstrate strong internal performance but mixed results on external validation.27,28 Prediction of wound healing, which is particularly relevant to chronic limb-threatening ischaemia and multidisciplinary limb salvage teams, has been studied using combinations of clinical features, perfusion measurements and wound images. Although multiple groups report models with good discrimination, the literature remains fragmented by small sample sizes, heterogeneous definitions of healing and limited external testing; in the absence of interpretable models and standardised endpoints, routine use is not yet justified.29,30 There is an emerging consensus that prediction models deliver the greatest value when coupled to modifiable actions, for example earlier duplex surveillance in patients at heightened risk of restenosis, or targeted optimisation of perfusion and infection control when the predicted probability of healing is low. Prospective evaluations of the impact of such model-guided actions are now a priority.

Current work around the world

Globally, research is converging on three practical directions. First, registry-linked modelling on large, analogous datasets is yielding models that can be externally validated and benchmarked across health systems, with increasing attention to calibration, decision-curve analysis and net benefit.31 Second, imaging AI is being standardised: groups are working on shared tasks for CTA segmentation and lesion scoring to improve reproducibility across vendors and scanners, and on explainable overlays that show clinicians which image regions drive a classification. Third, privacy-preserving training such as federated learning, in which models are trained locally at each site and only model updates are shared, is being piloted to overcome data-sharing barriers while improving generalisability across diverse populations and devices.32 Notably, in comparative evaluations ML systems have outperformed both expert clinical assessment and conventional risk scores, with higher discrimination and lower prediction error, moving the field a step closer to reliable adjunctive decision support at the point of care.33 Operationally, clinical deployment is best underpinned by multicentre validation, adoption of standardised data models and terminology to streamline collaboration and, where feasible, federated methods to promote diversity and equity.
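Net benefit, the quantity plotted in a decision-curve analysis, weighs true positives against false positives using the odds of the chosen risk threshold: net benefit = TP/n − (FP/n) × p_t/(1 − p_t), where p_t is the threshold probability at which a clinician would act. The sketch below assumes that standard formulation and compares a model with the default "treat all" strategy (treating none always has a net benefit of zero). The function names and toy data are hypothetical and for illustration only.

```python
def net_benefit(probs, outcomes, threshold):
    """Net benefit of acting on every patient whose predicted risk >= threshold."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must lie strictly between 0 and 1")
    n = len(outcomes)
    tp = sum(1 for p, y in zip(probs, outcomes) if p >= threshold and y == 1)
    fp = sum(1 for p, y in zip(probs, outcomes) if p >= threshold and y == 0)
    harm_weight = threshold / (1.0 - threshold)  # odds at the threshold
    return tp / n - (fp / n) * harm_weight


def treat_all_net_benefit(outcomes, threshold):
    """Net benefit of acting on everyone, regardless of predicted risk."""
    prevalence = sum(outcomes) / len(outcomes)
    return prevalence - (1.0 - prevalence) * threshold / (1.0 - threshold)


def decision_curve(probs, outcomes, thresholds):
    """List of (threshold, model net benefit, treat-all net benefit)."""
    return [(t, net_benefit(probs, outcomes, t), treat_all_net_benefit(outcomes, t))
            for t in thresholds]


# Toy inputs for illustration only (not study data).
probs = [0.10, 0.20, 0.30, 0.60, 0.70, 0.80]
outcomes = [0, 0, 1, 0, 1, 1]
for t, nb_model, nb_all in decision_curve(probs, outcomes, [0.25, 0.50]):
    print(f"threshold {t:.2f}: model {nb_model:.3f}, treat all {nb_all:.3f}")
```

A model is only clinically useful across the range of thresholds where its curve lies above both the treat-all and treat-none lines, which is why net benefit complements, rather than replaces, discrimination and calibration.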

Explainable AI

In clinical decision support, explainability means making a model’s predictions transparent and clinically interpretable, both at the individual level (why this output was produced for this patient) and at the system level (how the model behaves overall and which factors most influence its outputs). In practice, this allows clinicians to see which variables or image regions most influenced a risk estimate, judge whether the rationale aligns with the patient’s presentation, and decide when to accept, qualify or override the recommendation.34 Explainability is now recognised as a safety requirement rather than a research feature: it underpins safe deployment by enabling clinicians to verify outputs, identify potential model errors and maintain accountability for decisions. For structured (tabular) clinical prediction models, local explanations such as SHapley Additive exPlanations (SHAP) value summaries and Local Interpretable Model-agnostic Explanations (LIME) plots,35 together with global feature importance analyses, have become standard practice in recent high-quality studies and clinical pilots, enabling clinicians to understand why the model assigns a high-risk label to a particular patient.36 For imaging, saliency maps and attention overlays have matured to the point that they can highlight the stenotic segments or plaque components that informed the output. Tools intended for bedside use should display their confidence and calibration characteristics, provide example-level explanations and give way to inherently interpretable models when these perform equivalently.31 Ongoing performance monitoring should also be in place to detect drift and inequities. More broadly, a ‘near-miss’ reporting culture around AI recommendations, akin to pharmacovigilance, is emerging as a recommendation so that failures are shared and learned from.37
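For a linear (for example, logistic) model, SHAP values have a closed form when features are treated as independent: each feature’s contribution on the log-odds scale is its coefficient multiplied by the difference between the patient’s value and the reference-cohort mean. The sketch below assumes such a model; the feature names, coefficients and reference means are invented for illustration, and non-linear models such as gradient-boosted trees would instead need a dedicated explainer library.

```python
def log_odds(intercept, weights, x):
    """Linear predictor of a logistic model on the log-odds scale."""
    return intercept + sum(w * x[name] for name, w in weights.items())


def linear_shap(weights, patient, reference_means):
    """Exact SHAP values for a linear model with independent features:
    phi_i = w_i * (x_i - mean_i), on the log-odds scale."""
    return {name: w * (patient[name] - reference_means[name])
            for name, w in weights.items()}


# Hypothetical model, reference cohort means and patient, for illustration only.
intercept = -3.0
weights = {"age_decades": 0.30, "diabetes": 0.80, "abpi": -2.0}
reference_means = {"age_decades": 7.0, "diabetes": 0.4, "abpi": 0.8}
patient = {"age_decades": 8.0, "diabetes": 1.0, "abpi": 0.5}

phi = linear_shap(weights, patient, reference_means)
# Rank contributions by magnitude, as a bedside explanation panel might.
for name, value in sorted(phi.items(), key=lambda kv: abs(kv[1]), reverse=True):
    direction = "raises" if value > 0 else "lowers"
    print(f"{name}: {direction} log-odds by {abs(value):.2f}")

# Additivity check. For a linear model the log-odds at the reference means
# equals the average reference log-odds, so the contributions must sum to the gap.
gap = log_odds(intercept, weights, patient) - log_odds(intercept, weights, reference_means)
assert abs(sum(phi.values()) - gap) < 1e-9
```

The contributions sum exactly to the difference between the patient’s log-odds and the reference log-odds; this additivity is what allows a clinician to check that the explanation accounts for the whole risk estimate.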

AI governance

Regulatory and institutional governance has advanced materially in the past two years, reflecting a shift from voluntary principles to enforceable mechanisms for AI assurance and post-market oversight. The US Food and Drug Administration (FDA) finalised guidance on Predetermined Change Control Plans for AI-enabled device software functions in late 2024, clarifying how iterative model updates can be managed within an approved framework, and subsequently integrated that guidance into its AI/ML Software as a Medical Device (SaMD) resources in 2025.38 These updates clarify regulatory expectations for adaptive algorithms and continuously learning systems, which have historically faced uncertainty under fixed-approval pathways. Internationally, including in the UK, Good Machine Learning Practice principles articulated jointly by regulators provide direction on data management, training and validation, and human factors, emphasising transparency, reproducibility and clinician accountability across the AI lifecycle. At the institutional level, model deployment should be overseen by multidisciplinary committees including vascular surgery, data science, ethics, legal, IT security and patient representatives. Such committees act as local assurance bodies, ensuring that governance responsibilities are shared rather than delegated solely to data science teams. Documentation such as model cards should specify intended use, data provenance, performance across subgroups, known failure modes and update cadence, and explicit traceability between data, model versions and deployment environments should be maintained to support audit and incident review. For continuously learning systems, versioning, change control and re-approval triggers are essential so that the tool in clinic matches the tool that was validated. Local monitoring should also assess equity, alert burden and clinical workflow effects, with the authority to pause or retire models when harms outweigh benefits.39,40 Embedding these practices establishes a feedback loop between technical governance and clinical safety, aligning local oversight with emerging regulatory expectations.
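A model card, and the requirement that the tool in clinic matches the tool that was validated, can be made concrete with a small data structure. The sketch below is hypothetical: the field names simply mirror the documentation items listed above, and version matching is implemented as a SHA-256 fingerprint of the model artefact recorded at validation and recomputed at deployment, which is one possible approach among several.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelCard:
    """Hypothetical minimal model card; fields mirror the items listed in the text."""
    name: str
    version: str
    intended_use: str
    data_provenance: str
    subgroup_performance: dict    # e.g. {"subgroup label": C statistic}, from local validation
    known_failure_modes: tuple
    update_cadence: str
    validated_sha256: str         # fingerprint of the artefact that was validated


def fingerprint(model_bytes: bytes) -> str:
    """SHA-256 hex digest of a serialised model artefact."""
    return hashlib.sha256(model_bytes).hexdigest()


def deployment_matches_validation(card: ModelCard, deployed_bytes: bytes) -> bool:
    """True only if the deployed artefact is byte-identical to the validated one."""
    return fingerprint(deployed_bytes) == card.validated_sha256


# Stand-in bytes; in practice these would be read from the model file.
validated_artefact = b"example-model-weights-v1.2.0"
card = ModelCard(
    name="example_limb_event_model",  # hypothetical
    version="1.2.0",
    intended_use="Adjunct risk estimate to inform shared decision-making",
    data_provenance="Hypothetical multicentre registry extract",
    subgroup_performance={},  # to be filled from local validation, not invented
    known_failure_modes=("Missing runoff data",),
    update_cadence="Scheduled review; re-approval triggered by any retraining",
    validated_sha256=fingerprint(validated_artefact),
)

print(deployment_matches_validation(card, validated_artefact))               # True
print(deployment_matches_validation(card, b"example-model-weights-v1.3.0"))  # False
```

Making the card immutable (frozen) means any change of model or documentation requires issuing a new card, which keeps the audit trail between model versions and deployments explicit.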

Adjunct role in practice

Clinician expertise remains central, and the most appropriate framing today is that AI/ML is an adjunct. This framing reinforces clinical accountability and aligns with current regulatory guidance mandating human oversight of high-risk AI systems. In diagnostics, algorithms should pre-screen and double-check, while clinical examination, bedside Doppler, ABPI and toe–brachial pressure index (TBPI) remain foundational. Early evidence suggests that such hybrid workflows preserve diagnostic accuracy while reducing clinician workload and variability. Beyond specialist settings, dynamic ML models embedded in community and primary care records have shown promise in identifying high-risk patients with PAD in real time, enabling prioritisation for assessment and treatment.41 In shared decision-making, individualised risk estimates expressed as natural frequencies and simple visuals can help patients understand trade-offs between limb salvage strategies, surveillance intensity and the burden of therapy; presenting outputs in interpretable formats also supports informed consent and patient trust in AI-supported decisions. In stratification, consistent risk labels across teams (vascular surgery, podiatry, wound care and cardiology) can coordinate care so that high-risk patients receive timely interventions, and interoperability and shared data standards are key to keeping such labels consistent across systems and institutions. Across the continuum, AI/ML can reinforce risk factor management by identifying patients likely to benefit from statin adherence, supervised exercise therapy, smoking cessation support, glycaemic optimisation and foot care education; these digital nudges should be embedded within clinician-led pathways to ensure accountability and equity. In this configuration, AI functions as a precision-enabling layer that amplifies preventive care rather than displacing professional judgement.
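Converting a predicted probability into a natural frequency and a simple icon array is straightforward. The sketch below assumes a denominator of 100 and text-based icons; the function names, sentence wording and example outcome are illustrative only, and any patient-facing phrasing would need to be co-designed and tested with patients.

```python
def natural_frequency(probability: float, outcome: str, denominator: int = 100) -> str:
    """Express a predicted risk as a natural frequency sentence."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    count = round(probability * denominator)  # Python's round() sends exact halves to even
    return f"Out of {denominator} people like you, about {count} would {outcome}."


def icon_array(probability: float, denominator: int = 100, per_row: int = 10) -> str:
    """Text icon array: filled circles for people with the outcome, open circles for those without."""
    count = round(probability * denominator)
    icons = ["●"] * count + ["○"] * (denominator - count)
    rows = ["".join(icons[i:i + per_row]) for i in range(0, denominator, per_row)]
    return "\n".join(rows)


# Hypothetical predicted risk and outcome wording, for illustration only.
print(natural_frequency(0.18, "need a further procedure within a year"))
print(icon_array(0.18))
```

Using the same denominator for every estimate shown to a patient makes comparisons between options (for example, two revascularisation strategies) easier than comparing percentages.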

Pitfalls: black box, equity, and overfitting

The most common pitfalls are now well documented. Black box models may latch onto spurious correlates such as scanner signatures, site effects or documentation quirks, and then fail when deployed elsewhere.42 Overfitting to single-centre datasets remains common, as does inadvertent data leakage that inflates apparent performance.43 Equity concerns are prominent because PAD disproportionately affects patients with diabetes, chronic kidney disease and socioeconomic disadvantage, and subgroup performance gaps can widen existing disparities. For these reasons, studies should prespecify cohorts, strictly separate training and test data by patient and by time, and perform external validation across sites, devices and populations. Calibration should be reported alongside discrimination, and decision-curve analyses should make net benefit explicit. Bias mitigation strategies (reweighting, balanced sampling, threshold adjustment and fairness-aware evaluation) are recommended and should be reported transparently.44 Automation bias and deskilling are human-factors risks; interfaces should display rationale and uncertainty, and clinicians should stay in the loop for interpretation and override.45 Embedding these safeguards converts technical validation into operational safety, helping models remain trustworthy under real-world conditions.
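Separating training and test data by patient and by time can be sketched as follows. Under the assumptions here, each patient is assigned to the development or test cohort according to the date of their first record relative to a cutoff, development data are restricted to records before the cutoff, and the test cohort contains only patients first seen on or after it, so no patient contributes to both sets. The record fields (patient_id, index_date), the cutoff and the toy records are hypothetical.

```python
from datetime import date


def patient_temporal_split(records, cutoff):
    """Split records so that no patient appears in both sets and all
    development data precede the cutoff.

    records: list of dicts with hypothetical keys 'patient_id' and 'index_date'.
    """
    first_seen = {}
    for r in records:
        pid = r["patient_id"]
        if pid not in first_seen or r["index_date"] < first_seen[pid]:
            first_seen[pid] = r["index_date"]

    train, test = [], []
    for r in records:
        if first_seen[r["patient_id"]] < cutoff:
            # Development patient: keep only pre-cutoff records; later ones are dropped.
            if r["index_date"] < cutoff:
                train.append(r)
        else:
            # Patient first seen on or after the cutoff: held out for testing.
            test.append(r)
    return train, test


# Toy records for illustration only.
records = [
    {"patient_id": "A", "index_date": date(2020, 3, 1)},
    {"patient_id": "A", "index_date": date(2023, 5, 1)},  # dropped: after cutoff
    {"patient_id": "B", "index_date": date(2021, 7, 1)},
    {"patient_id": "C", "index_date": date(2023, 2, 1)},
]
train, test = patient_temporal_split(records, cutoff=date(2022, 1, 1))
print([r["patient_id"] for r in train], [r["patient_id"] for r in test])
```

Dropping the post-cutoff records of development patients sacrifices some data, but it prevents the leakage that occurs when a patient’s later outcomes inform a model that is then tested on the same patient.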

Reporting and appraisal guidelines

Methodological rigour is improving, supported by new and updated guidance that marks a shift from ad hoc study descriptions to structured, auditable evidence standards for clinical AI. TRIPOD+AI provides harmonised reporting standards for prediction model studies, whether regression or ML is used.46 DECIDE-AI outlines how to report early-stage clinical evaluation of AI decision support systems.47 STARD-AI addresses diagnostic accuracy studies of AI.48 PROBAST+AI updates the risk-of-bias and applicability assessment for prediction models built with regression or ML.49 For randomised evaluations, CONSORT-AI and SPIRIT-AI remain the standards for trial reports and trial protocols, respectively.50,51 Within vascular surgery, uptake of these frameworks by authors, reviewers and editors will speed translation by strengthening reproducibility, comparability and clinical relevance, and their consistent adoption will also streamline regulatory submissions and evidence reviews for AI-enabled vascular tools.

Conclusion

The state of AI for PAD in 2026 can be summarised as robust feasibility with islands of readiness. Diagnostic support tools for waveform interpretation and CTA quantification are technically mature and entering early clinical evaluation, and adoption is recommended where outputs are transparent and verifiable. Risk stratification for major adverse limb events, major adverse cardiovascular events, amputation and reintervention is strong enough to support shared decision-making and surveillance planning in many settings, provided that external validation and calibration are documented locally. Wound healing prediction shows promise but requires larger, standardised, prospective evaluations before widespread use can be recommended. Explainability, governance and equity considerations have moved to the forefront, aided by clearer regulatory pathways and stronger reporting and appraisal guidelines. The immediate trajectory is towards measured, monitored deployment, with clear assessment of evidence of benefit across outcomes, experience and value. With appropriate safeguards, vascular surgery services are well positioned to introduce targeted AI tools as adjuncts, helping to standardise decisions, personalise treatment and close gaps in diagnosis and long-term follow-up for PAD.

Article DOI:

Journal Reference:

J.Vasc.Soc.G.B.Irel. 2026;ONLINE AHEAD OF PUBLICATION

Publication date:

January 19, 2026

Author Affiliations:

1. Academic Vascular Surgical Unit, Hull York Medical School, Hull, UK

2. Centre for Responsible AI, School of Digital and Physical Sciences, University of Hull, UK

Corresponding author:

Bharadhwaj Ravindhran

Academic Vascular Surgical Unit, 2nd Floor Allam Diabetes Centre, Hull Royal Infirmary, Anlaby Road, Hull HU3 2JZ, UK

Email: bharadhwaj.ravindhran@nhs.net